This post is a follow-up to my post about enriching Sentinel via MS Graph here, and a response to the community post here: how do we create dynamic Watchlists of high value groups and their members? There are a couple of ways to do this: you can use either the Azure Sentinel Logic App connector or the Watchlist API. For this example we will use the Logic App. Let’s start with some test users and groups. In this example test users 1 and 2 are in privileged group 1, and test user 2 is also in privileged group 2.

First let’s retrieve the group ids from the MS Graph. We have to do this because Azure AD group names are not unique, but the object ids are. You will need an Azure AD app registration with sufficient privilege to read the directory. Directory.Read.All is more than enough, but depending on what other tasks your app is doing it may be too much. As always, least privilege! Grab the client id, the tenant id and a client secret for your app, and keep the secret protected. How you do your secrets management is up to you; I use an Azure Key Vault because Logic Apps has a native connector for it that uses an Azure AD managed identity to authenticate itself.

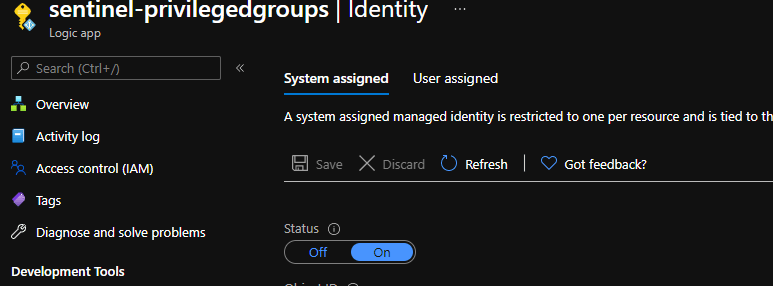

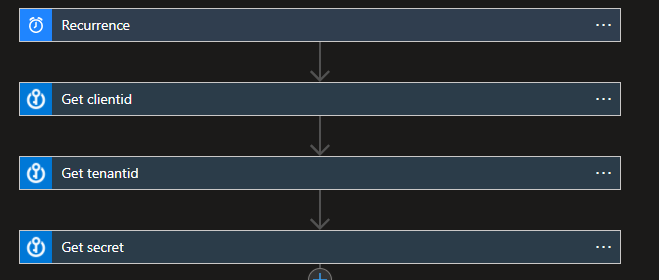

Now create the playbook/Logic App and set the trigger to recurrence, since we will probably want this job to run every few hours, daily, or whatever suits you. If you are using an Azure Key Vault, give the Logic App a managed identity under the identity tab.

Then give the Logic App’s managed identity permission to list and read secrets on your Key Vault by adding an access policy.

Next we need to call the MS Graph to get the group ids of our privileged groups. We can’t use the Azure AD Logic App connector yet because it requires the object ids, and we want something more dynamic that will pick up new groups for us automatically. Use the Key Vault connector to get the client id, tenant id and secret, connecting with the managed identity.

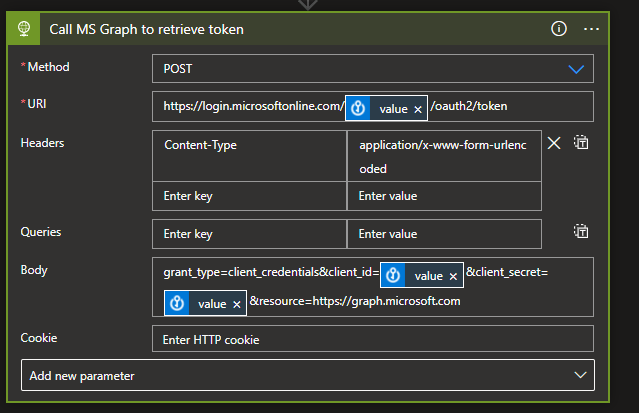

Next we POST to the Azure AD token endpoint to get an access token for the MS Graph.

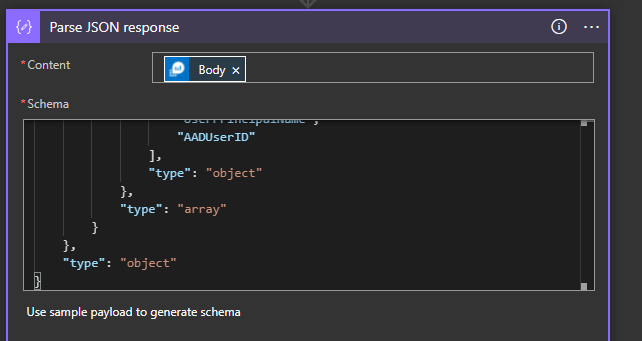

Add the secrets from your Key Vault: your tenant id goes into the URI, and the client id and secret go into the body. We want to parse the JSON response to get our token for re-use. The schema for the response is:

{

"properties": {

"access_token": {

"type": "string"

},

"expires_in": {

"type": "string"

},

"expires_on": {

"type": "string"

},

"ext_expires_in": {

"type": "string"

},

"not_before": {

"type": "string"

},

"resource": {

"type": "string"

},

"token_type": {

"type": "string"

}

},

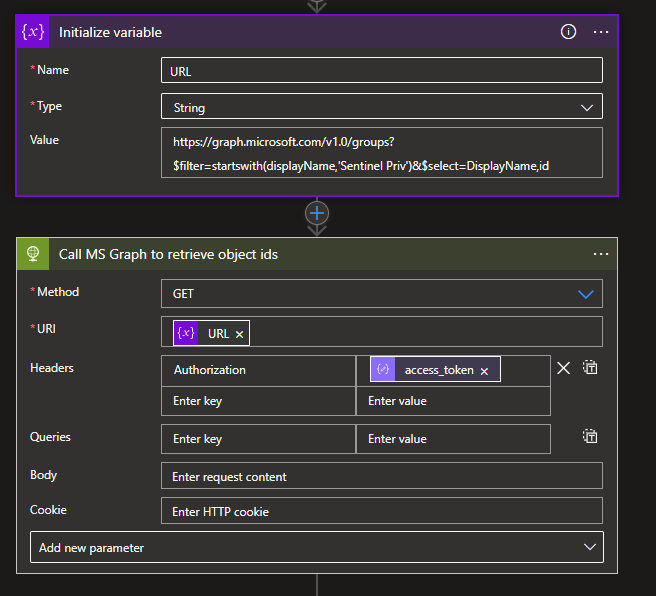

"type": "object"

}Now we use that token to call the MS Graph to get our object ids. The Logic App HTTP action is picky about URI syntax so sometimes you just have to add your URL to a variable and input it into the HTTP action, our query will search for any groups starting with ‘Sentinel Priv’ but you could search on whatever makes sense for you.
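For anyone who wants to test the two HTTP calls outside the Logic App, here is a minimal Python sketch of how the requests could be built. It only constructs the URL, body and header and makes no network calls. The token endpoint path and the `resource` parameter are assumptions inferred from the fields in the token response schema, and the function names are purely illustrative. The `$select=displayName,id` part is inferred from the `@odata.context` value in the Graph response.

```python
from urllib.parse import quote, urlencode

GRAPH_RESOURCE = "https://graph.microsoft.com"


def build_token_request(tenant_id, client_id, client_secret):
    """Return (url, form_body) for a client-credentials token request."""
    # Assumption: the Azure AD v1 token endpoint, with the tenant id in the URI.
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/token"
    # The client id and secret travel in the form-encoded body.
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": GRAPH_RESOURCE,
    })
    return url, body


def build_group_query(prefix):
    """Return a Graph URL listing groups whose displayName starts with prefix."""
    # OData string literals escape a single quote by doubling it.
    escaped = prefix.replace("'", "''")
    filter_expr = f"startswith(displayName,'{escaped}')"
    # Percent-encode the filter so spaces and quotes cannot break the URI.
    return (
        "https://graph.microsoft.com/v1.0/groups"
        f"?$filter={quote(filter_expr, safe='')}"
        "&$select=displayName,id"
    )


def auth_header(access_token):
    """Return the header that carries the parsed access_token to the Graph."""
    return {"Authorization": f"Bearer {access_token}"}
```

Encoding the filter up front is one way around the URI pickiness mentioned above: the HTTP action then receives a URL with no raw spaces or quotes in it.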

When building these Logic Apps, it is often worth testing a run and checking the outputs to make sure everything is working. If we trigger our app now, we can see the output we are expecting:

```json
{
    "@odata.context": "https://graph.microsoft.com/v1.0/$metadata#groups(displayName,id)",
    "value": [
        {
            "displayName": "Sentinel Privileged Group 1",
            "id": "54bb0603-7d02-40a1-874d-2dc26010c511"
        },
        {
            "displayName": "Sentinel Privileged Group 2",
            "id": "e5efe4a4-51e0-4ed7-96b5-9d77ffb7ab74"
        }
    ]
}
```

We will need to leverage the ids from this response, so parse the JSON using the following schema:

```json
{
    "properties": {
        "@@odata.context": {
            "type": "string"
        },
        "value": {
            "items": {
                "properties": {
                    "displayName": {
                        "type": "string"
                    },
                    "id": {
                        "type": "string"
                    }
                },
                "required": [
                    "displayName",
                    "id"
                ],
                "type": "object"
            },
            "type": "array"
        }
    },
    "type": "object"
}
```

Next we need to manually create the Watchlist in Sentinel that we want to update. For this example we have created the PrivilegedUsersGroups watchlist using a little sample data.

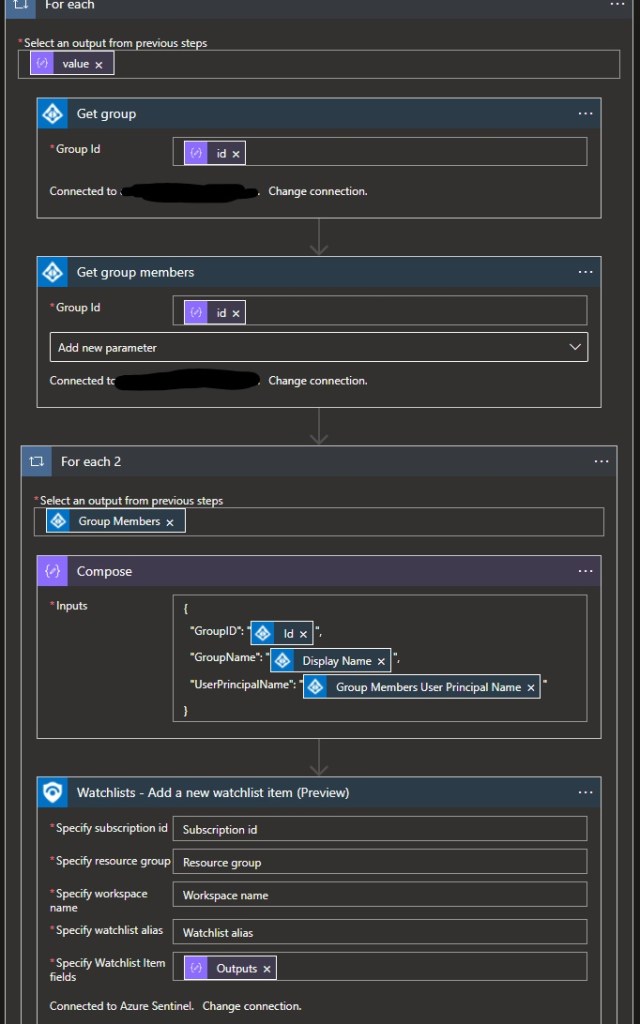

Finally we iterate through the group ids we retrieved from the MS Graph, get the members of each group, create a JSON payload and use the ‘Add a new watchlist item’ Logic App action. You will need to add your Sentinel workspace details here, of course.

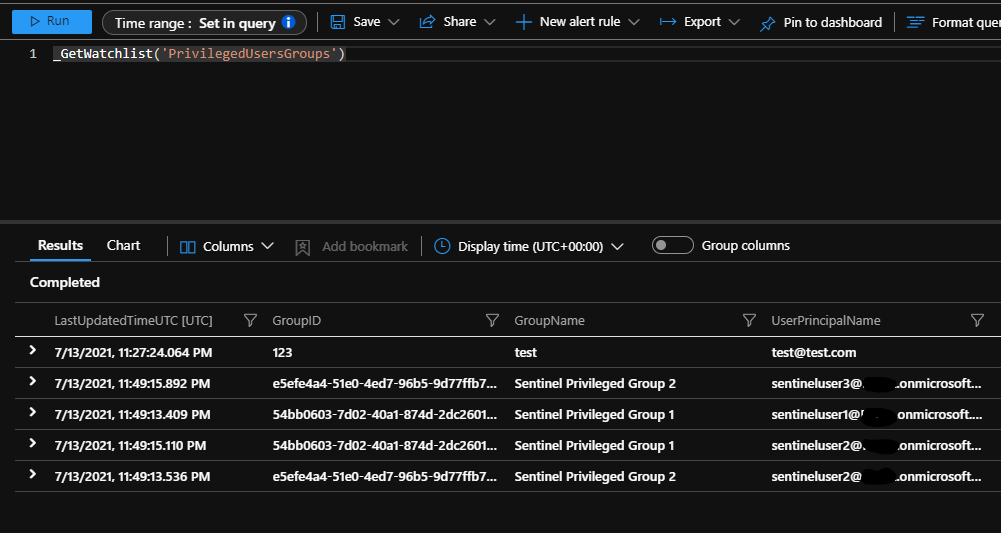
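The loop above can be sketched in Python to show the shape of the data being built. This is only an illustration under stated assumptions, not the Logic App itself. The groups response is the example output from earlier in the post. The `members_by_group` lookup and its UPNs are hypothetical stand-ins for whatever the group member lookup returns. The `UPN` and `GroupId` column names are assumptions, while `GroupName` is the column used in the query later in this post.

```python
# Example Graph response from earlier in the post.
groups_response = {
    "@odata.context": "https://graph.microsoft.com/v1.0/$metadata#groups(displayName,id)",
    "value": [
        {
            "displayName": "Sentinel Privileged Group 1",
            "id": "54bb0603-7d02-40a1-874d-2dc26010c511",
        },
        {
            "displayName": "Sentinel Privileged Group 2",
            "id": "e5efe4a4-51e0-4ed7-96b5-9d77ffb7ab74",
        },
    ],
}

# Hypothetical membership data: group object id -> member UPNs.
members_by_group = {
    "54bb0603-7d02-40a1-874d-2dc26010c511": [
        "testuser1@contoso.example",
        "testuser2@contoso.example",
    ],
    "e5efe4a4-51e0-4ed7-96b5-9d77ffb7ab74": [
        "testuser2@contoso.example",
    ],
}


def build_watchlist_items(groups_response, members_by_group):
    """Build one watchlist row per (group, member) pair."""
    items = []
    for group in groups_response["value"]:
        # Groups with no known members simply produce no rows.
        for upn in members_by_group.get(group["id"], []):
            items.append({
                "UPN": upn,
                "GroupName": group["displayName"],
                "GroupId": group["id"],
            })
    return items


watchlist_items = build_watchlist_items(groups_response, members_by_group)
```

Each dictionary in `watchlist_items` corresponds to one JSON payload sent to the watchlist, so a user who belongs to two groups appears as two rows.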

When we run our Logic App, it should now insert the members into our watchlist, including their UPN, the group name and the group id.

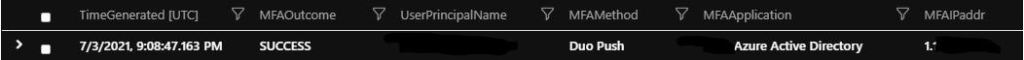

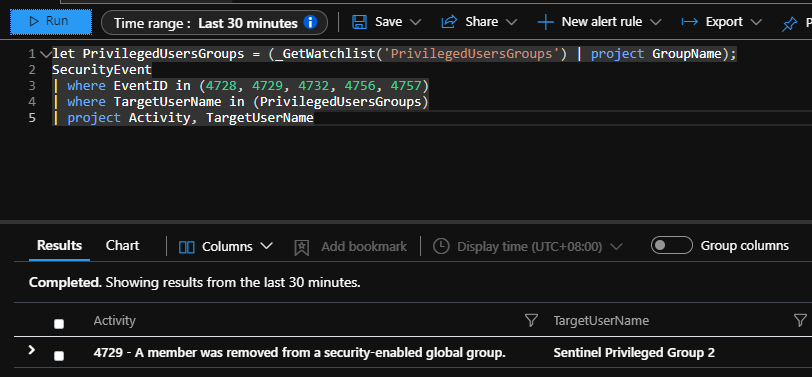

We can clean up the test data if we want. Now we can query for events related to those groups via our watchlist:

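```kusto
// Build the list of privileged group names from the watchlist
let PrivilegedUsersGroups = (_GetWatchlist('PrivilegedUsersGroups') | project GroupName);
SecurityEvent
// Group membership change events; TargetUserName holds the group name here
| where EventID in (4728, 4729, 4732, 4756, 4757)
| where TargetUserName in (PrivilegedUsersGroups)
| project Activity, TargetUserName
```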

The one downside to using Watchlists in this way is that the Logic App cannot currently remove items, so each run adds the same members again. It isn’t the end of the world though: you can query the watchlist on its latest updated time. If your Logic App runs every four hours, just query the last four hours of items.

```kusto
_GetWatchlist('PrivilegedUsersGroups') | where LastUpdatedTimeUTC > ago(4h)
```
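To make the effect of that time filter concrete, here is a small Python sketch of the same idea applied to sample rows. The rows and the `recent_items` helper are hypothetical. Only the `LastUpdatedTimeUTC` field name comes from the query above.

```python
from datetime import datetime, timedelta, timezone


def recent_items(items, now, window=timedelta(hours=4)):
    """Keep only the rows updated within the window ending at now."""
    cutoff = now - window
    return [item for item in items if item["LastUpdatedTimeUTC"] > cutoff]


# Hypothetical rows: the same member added by two consecutive runs.
now = datetime(2021, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"UPN": "testuser1@contoso.example", "LastUpdatedTimeUTC": now - timedelta(hours=5)},
    {"UPN": "testuser1@contoso.example", "LastUpdatedTimeUTC": now - timedelta(hours=1)},
]

latest = recent_items(rows, now)
```

Only the row from the most recent run survives the filter, which is why matching the window to the recurrence interval hides the duplicates left by earlier runs.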